David Holzmüller

I am a starting researcher in the SODA team at INRIA Saclay.

E-Mail: firstname.lastname@inria.fr (replace ü by u)

Research interests

I am currently interested in machine learning for tabular data. With my coauthors, I have recently introduced strong tabular classification and regression methods (RealMLP, TabICL, xRFM) as well as the TabArena benchmark. Additionally, I am interested in uncertainty quantification, active learning, and expanding the scope of meta-learned tabular foundation models. Previously, I have worked on various topics, including active learning, interatomic potentials, and machine learning theory (neural tangent kernels, double descent, benign overfitting, non-log-concave sampling).

Talks

Tabular data

Active learning

- Active Learning for Science (AMLAB Seminar, 2025, slides)

Theory

- Generalization theory of linearized neural networks (MPI MIS + UCLA seminar, slides and video)

Papers

ML for Tabular Data

David Holzmüller, Léo Grinsztajn, and Ingo Steinwart, Better by Default: Strong Pre-Tuned MLPs and Boosted Trees on Tabular Data, Neural Information Processing Systems, 2024. https://arxiv.org/abs/2407.04491

Jingang Qu, David Holzmüller, Gaël Varoquaux, and Marine Le Morvan, TabICL: A Tabular Foundation Model for In-Context Learning on Large Data, International Conference on Machine Learning, 2025. https://arxiv.org/abs/2502.05564

Nick Erickson, Lennart Purucker, Andrej Tschalzev, David Holzmüller, Prateek Mutalik Desai, David Salinas, and Frank Hutter, TabArena: A Living Benchmark for Machine Learning on Tabular Data, Neural Information Processing Systems (spotlight), 2025. https://arxiv.org/abs/2506.16791

Daniel Beaglehole, David Holzmüller, Adityanarayanan Radhakrishnan, and Mikhail Belkin, xRFM: Accurate, scalable, and interpretable feature learning models for tabular data, arXiv:2508.10053, 2025. https://arxiv.org/abs/2508.10053

Uncertainty Quantification

Eugène Berta, David Holzmüller, Michael I. Jordan, and Francis Bach, Rethinking Early Stopping: Refine, Then Calibrate, arXiv:2501.19195, 2025. https://arxiv.org/abs/2501.19195

Active Learning

David Holzmüller, Viktor Zaverkin, Johannes Kästner, and Ingo Steinwart, A Framework and Benchmark for Deep Batch Active Learning for Regression, Journal of Machine Learning Research, 2023. https://arxiv.org/abs/2203.09410

Daniel Musekamp, Marimuthu Kalimuthu, David Holzmüller, Makoto Takamoto, and Mathias Niepert, Active Learning for Neural PDE Solvers, 2024. https://arxiv.org/abs/2408.01536

Viktor Zaverkin, David Holzmüller, Ingo Steinwart, and Johannes Kästner, Exploring chemical and conformational spaces by batch mode deep active learning, Digital Discovery, 2022. https://doi.org/10.1039/D2DD00034B

Viktor Zaverkin, David Holzmüller, Henrik Christiansen, Federico Errica, Francesco Alesiani, Makoto Takamoto, Mathias Niepert, and Johannes Kästner, Uncertainty-biased molecular dynamics for learning uniformly accurate interatomic potentials, npj Computational Materials, 2024. https://www.nature.com/articles/s41524-024-01254-1

NN Theory

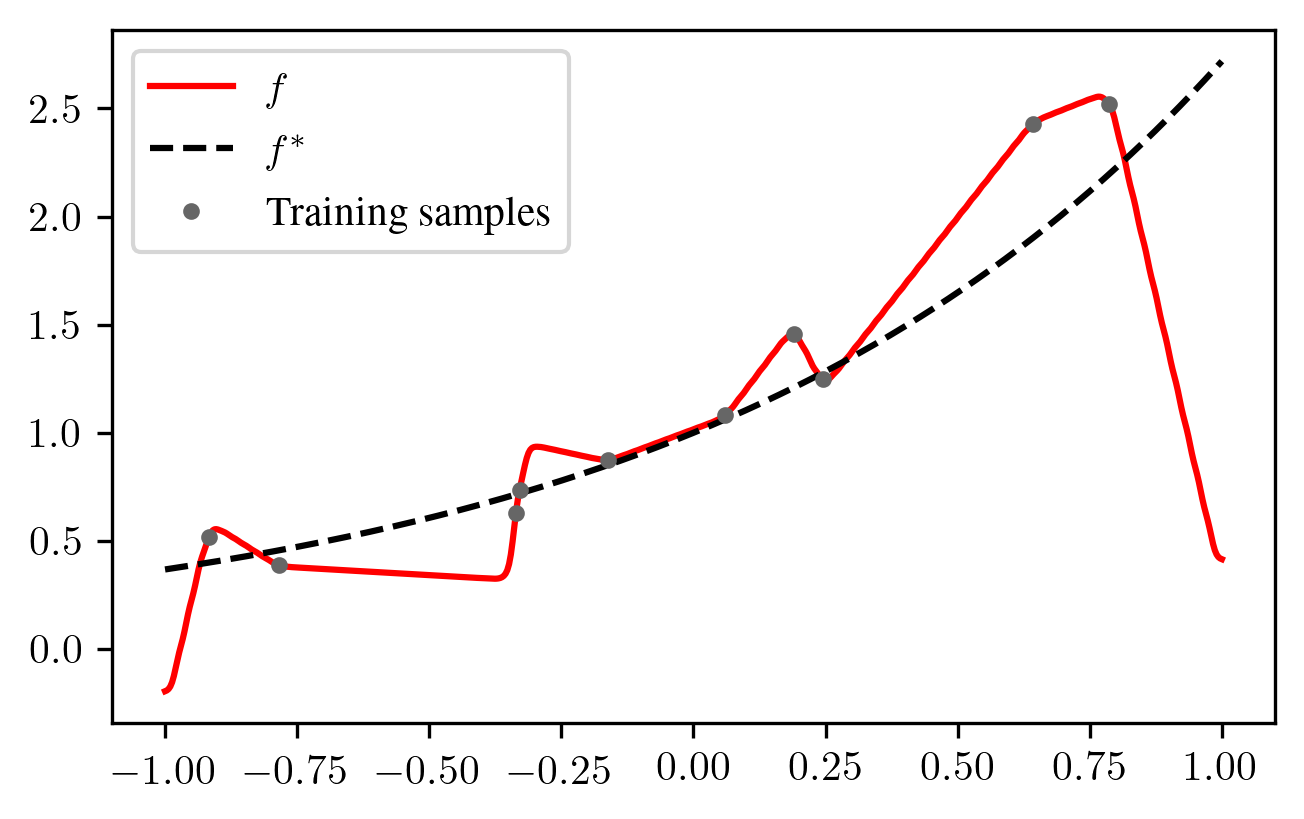

Moritz Haas*, David Holzmüller*, Ulrike von Luxburg, and Ingo Steinwart, Mind the spikes: Benign overfitting of kernels and neural networks in fixed dimension, Neural Information Processing Systems, 2023. https://proceedings.neurips.cc/paper_files/paper/2023/hash/421f83663c02cdaec8c3c38337709989-Abstract-Conference.html

David Holzmüller, On the Universality of the Double Descent Peak in Ridgeless Regression, International Conference on Learning Representations, 2021. https://openreview.net/forum?id=0IO5VdnSAaH

David Holzmüller and Ingo Steinwart, Training Two-Layer ReLU Networks with Gradient Descent is Inconsistent, Journal of Machine Learning Research, 2022. https://jmlr.org/papers/v23/20-830.html

Sampling Theory

David Holzmüller and Francis Bach, Convergence rates for non-log-concave sampling and log-partition estimation, 2023. https://arxiv.org/abs/2303.03237

Other atomistic ML

Viktor Zaverkin*, David Holzmüller*, Ingo Steinwart, and Johannes Kästner, Fast and Sample-Efficient Interatomic Neural Network Potentials for Molecules and Materials Based on Gaussian Moments, J. Chem. Theory Comput. 17, 6658–6670, 2021. https://arxiv.org/abs/2109.09569

Viktor Zaverkin, David Holzmüller, Luca Bonfirraro, and Johannes Kästner, Transfer learning for chemically accurate interatomic neural network potentials, 2022. https://arxiv.org/abs/2212.03916

Viktor Zaverkin, David Holzmüller, Robin Schuldt, and Johannes Kästner, Predicting properties of periodic systems from cluster data: A case study of liquid water, J. Chem. Phys. 156, 114103, 2022. https://aip.scitation.org/doi/full/10.1063/5.0078983

Other

Marimuthu Kalimuthu, David Holzmüller, and Mathias Niepert, LOGLO-FNO: Efficient Learning of Local and Global Features in Fourier Neural Operators, ICLR 2025 Workshop on Machine Learning Multiscale Processes (oral), 2025.

David Holzmüller and Dirk Pflüger, Fast Sparse Grid Operations Using the Unidirectional Principle: A Generalized and Unified Framework, 2021. In: Bungartz, HJ., Garcke, J., Pflüger, D. (eds) Sparse Grids and Applications - Munich 2018. Lecture Notes in Computational Science and Engineering, vol 144. Springer, Cham. https://link.springer.com/chapter/10.1007/978-3-030-81362-8_4

Daniel F. B. Haeufle, Isabell Wochner, David Holzmüller, Danny Driess, Michael Günther, and Syn Schmitt, Muscles Reduce Neuronal Information Load: Quantification of Control Effort in Biological vs. Robotic Pointing and Walking, 2020. https://www.frontiersin.org/articles/10.3389/frobt.2020.00077/full

David Holzmüller, Improved Approximation Schemes for the Restricted Shortest Path Problem, 2017. https://arxiv.org/abs/1711.00284

David Holzmüller, Efficient Neighbor-Finding on Space-Filling Curves, 2017. https://arxiv.org/abs/1710.06384

Short CV

- since 2025: Starting researcher at INRIA Saclay, SODA team

- since 2023: Postdoc at INRIA Paris, co-advised by Francis Bach and Gaël Varoquaux

- April 2022 - July 2022: Research visit at INRIA Paris, Francis Bach

- 2020 - 2023: PhD student at University of Stuttgart, supervised by Ingo Steinwart

- 2016 - 2019: M.Sc. Computer Science, University of Stuttgart

- 2015 - 2019: B.Sc. Mathematics, University of Stuttgart

- 2013 - 2016: B.Sc. Computer Science, University of Stuttgart